This will be another post about the mysterious Voynich Manuscript, with apologies to those of my readers and subscribers whose cup of tea this isn’t. If you’re new to the topic, you may wish to find a different entry point, since I’ll be picking things up here some distance down the rabbit hole already.

I’ve been trying lately to come up with some way to analyze Voynichese text that can accommodate patterning on multiple levels simultaneously—what glyphs can be adjacent to each other, what word structures are valid, what sequences of words are most probable, what phenomena tend to appear in particular parts of lines, and what phenomena tend to appear in particular parts of paragraphs. The patterning we can detect on all these levels seems just as likely to be interconnected somehow as not, and perhaps even to result straightforwardly from the interplay among a relatively simple set of rules. And if that’s true, then I’m not sure we should be able to account satisfactorily for any one of the levels by itself without accounting for all of the levels at once; for example, it might not be possible to formulate a fully satisfactory “word grammar” in isolation from considerations of line and paragraph structure if these all depend to some degree upon one another. With that in mind, I’ve started trying to develop a scalable model around individual choices of glyph and whatever contextual features can be shown to affect their probabilities, whether closer by or more distant. Some pieces of this model have been easy enough to tackle, while others still seem almost impossibly difficult. But so far it’s been feeling like a promising approach overall—maybe as a kind of brute-force pattern detection mechanism, if nothing else.

In what follows, I’ll be treating ch, Sh, cTh, cPh, cKh, and cFh as indivisible units for purposes of analysis. Like many other people, though, I’ve had some trouble coming up with a good strategy for handling i and e, which routinely appear repeated next to each other. It wouldn’t make much sense just to assess the probability of, say, one i following another i, given that double ii is actually more common than single i. What I’ve done—where applicable—is first to assess the probability of a transition to any quantity of e or i, and then to assess the probability of a specific quantity of e or i as a separate step. For example, I might first consider the probability of che, chee, or cheee as opposed to cha, cho, etc.; and then, afterwards, the probability of che, chee, or cheee specifically.

§ 1

Microscale Analysis

Let’s kick things off with the following question:

Given one glyph of any particular type, what is the probability—expressed as a percentage—that the next glyph will be of a particular type, ignoring spaces?

This amounts to trying to analyze Voynichese glyph sequences as first-order Markov chains, by means of first-order Markov modeling. I’ll acknowledge up front that this approach is too simple to account for every detectable pattern, and that the picture which emerges from it will be a bit simplified and stylized. But sometimes a simplified and stylized picture can be heuristically useful, so I hope you’ll indulge my experiment with that in mind. Some terminology:

- Any given permutation of two glyphs such as k_a is a bigram.

- The step rightward from one glyph to another, such as from k to a, is a transition. We can notate this as k>a to distinguish it from k_a as a bigram.

- The probability of a transition from one glyph to another, such as k>a, is its transitional probability, not to be confused with the frequency of the k_a bigram itself.

- The whole set of probabilities of different glyphs following after any one given glyph, such as the probabilities of all different glyphs following after k, constitute that glyph’s transitional probability matrix, with the total adding up to 100%.

I know there have already been plenty of studies of Voynichese in terms of its frequencies and probabilities and entropy (a good entry-point to which can be found here), but these generally seem to have pursued higher-level questions formulated with natural-language plaintext as their point of reference: whether the text obeys Zipf’s Law, how its overall statistics compare to those of texts in languages such as Latin, Hebrew, or Hawaiian, and so on. Meanwhile, past efforts to model finer structural details have tended to focus primarily on the morphology of “words,” or glyph sequences with space on either side. Rather than trying to work out an independent transitional probability matrix for each glyph, these efforts have typically proceeded by identifying series of “slots” and then tallying the various configurations of these “slots” and the various glyphs or combinations of glyphs that can fill each of them. Jorge Stolfi’s work is the paradigmatic example (no pun intended).

But I’ve been looking for a way to develop a different kind of morphology ever since I began to suspect that the patterns we should be interested in may not respect word boundaries. After all, the spaces don’t seem as though they could carry much if any meaning. It’s not unusual for them to be visually ambiguous, and if we were to remove all of them, we could reconstruct where they go with over 95% accuracy by inserting them according to the following rules (introduced in an earlier post, at section four):

- Before q

- After g, m, n

- After r except before y, i

- After s before ch (with or without inserted gallows), Sh, d, l

- After y except before t, k

- After l before r, o, d, ch (with or without inserted gallows), Sh

- Between repetitions of o, s, l

Even the vast majority of exceptions to the above rules involve fewer than twenty specific bigrams starting with l, r, y, o, and s, and the same bigrams also tend to be spaced ambiguously the most often, judging from the incidence of “comma breaks” in the Zandbergen transcription. There thus appears to be a distinction inherent to the script itself between strong breakpoints which are pretty much obligatory and ambivalent breakpoints which can go either way. These patterns seem as consistent as any associated with a conventional “word grammar,” but if we limit our study to the forms of discrete words, we won’t detect them. Insofar as such patterns have been studied in the past, they’ve generally been approached in practice as the stuff of syntax rather than morphology (with notable contributions ranging from Prescott Currier to Emma May Smith and Marco Ponzi); that is, there’s the matter of how words are put together according to tight formal constraints, and then there’s the separate matter of tantalizing correlations between the endings of those words and the beginnings of the words that next follow them. Maybe these really are separate phenomena. But I don’t want to assume they are, and I’d like to analyze them in some way that can accommodate the possibility that they’re not. With that in mind, combining glyph-by-glyph transitional probabilities with spacing rules is the simplest strategy it occurs to me to try.

If we calculate a transitional probability matrix for each Voynichese glyph type, disregarding spaces, we find not only that each glyph favors some other glyph to follow it more than any others, but that it often does so by a wide margin over the next most preferred option. I’ll refer to the most probable transition for a given glyph as its “first option,” with other transitions ranked as “second option,” “third option,” and so forth.

Transitional probabilities are so strikingly different between the two “languages” Currier A and Currier B that there’s scarcely any value in calculating them across the whole manuscript. So let’s start by taking a look at a list of just the first and second options for each reasonably common glyph type in Currier B, together with the percentages of cases involved. Note that for glyphs in second position, I use i+ and e+ to indicate any quantity of i or e glyphs, following the logic laid out in my introduction. Although I give percentages to two decimal places because that’s what my scripts report, I don’t mean to imply that this degree of precision is meaningful.

- a>i+ (47.76%); a>r (23.60%)

- cFh>y (35.71%); cFh>e+ (25.00%)

- ch>e+ (57.94%); ch>o (11.99%)

- cKh>y (58.79%); cKh>e+ (24.18%)

- cPh>e+ (42.70%); cPh>y (17.98%)

- cTh>y (53.70%); cTh>e+ (23.55%)

- d>y (63.71%); d>a (25.87%)

- e>d (47.36%); e>y (19.85%)

- ee>d (39.79%); ee>y (37.96%)

- eee>y (50.38%); eee>d (27.48%)

- f>ch (52.38%); f>a (18.65%)

- g>a (28.57%); g>o (14.29%)

- i>n (72.16%); i>r (20.34%)

- ii>n (95.13%); ii>r (3.44%)

- iii>n (90.70%); iii>l (3.10%)

- k>e+ (41.86%); k>a (35.94%)

- l>k (19.85%); l>ch (19.73%)

- m>o (35.25%); m>ch (26.62%)

- n>o (33.11%); n>ch (26.05%)

- o>k (30.31%); o>l (23.25%)

- p>ch (55.95%); p>o (16.42%)

- q>o (97.69%); q>e+ (1.17%)

- r>a (29.25%); r>o (27.99%)

- s>a (47.18%); s>o (26.53%)

- Sh>e+ (68.81%); Sh>o (11.75%)

- t>e+ (33.74%); t>a (33.03%)

- y>q (28.11%); y>o (17.96%)

The transitions shown in blue involve relatively strong breakpoints, while the transitions shown in red involve relatively ambivalent ones (with at least 100 cases going each way in Currier B). Transitions that require or permit spacing tend to have weaker preferences for first options than transitions that almost always appear written together, but not overwhelmingly so, and not invariably. Meanwhile, the glyph n transitions ~59% of the time to either its first or second option—with a strong breakpoint—which isn’t far behind the ~61% we see for cPh or cFh, both of which strongly favor being written together with the following glyph. Thus, transitions between words appear to have constraints comparable to those within words. And transitions are rather tightly constrained on the whole. The only glyphs that don’t have at least a 50% probability of being followed by either their first or second option are l (~40%), y (~46%) and g (~44%).

If we now trace the most probable single course forward from glyph to glyph in Currier B—the path of least resistance, if you will—we discover the following closed loop:

- q>o (97.69%)

- o>k (30.31%)

- k>e+ (41.86%)

- more specifically k>ee (22.72%; 54.28% within k>e+)

- ee>d (39.79%)

- d>y (63.71%)

- y>q (28.11%)

This default loop generates the sequence qokeedyqokeedyqokeedyqokeedy….; and since there’s just one strong breakpoint at the adjacency y_q, and no ambivalent breakpoints, it would predictably appear broken up into words as qokeedy.qokeedy.qokeedy.qokeedy. Of course, this happens to resemble one of the most conspicuous kinds of repetitive pattern we actually see in Currier B.

Meanwhile, the most probable path leading forward from every glyph that isn’t itself in the qokeedy loop nevertheless leads into it sooner or later. Some reach it by way of e: ch>e, Sh>e, cPh>e. Others reach it by way of y (cFh>y, cKh>y, cTh>y) or k (l>k). Yet others reach it indirectly by way of ch>e: f>ch, and p>ch. And some reach it by way of o:

- a>i+ (47.76%)

- more specifically a>ii (26.65%; 55.80% within a>i+)

- ii>n (95.13%)

- n>o (33.11%)

The qokeedy loop is closed if we choose the most probable option for each transition within it, but it’s more likely to permit escape in some places than in others. Here are all the options we encounter as we pass through the loop with at least a 10% probability.

- q>o (97.69%)

- o>k (30.31%); second option = o>l (23.25%); third option = o>t (18.63%)

- k>e+ (41.86%); second option = k>a (35.94%)

- more specifically k>ee (22.72%; 54.28% within k>e+); second option = k>e (17.20%; 41.09% within k>e+)

- ee>d (39.79%); second option = ee>y (37.96%); third option = ee>o (11.98%)

- d>y (63.71%); second option = d>a (25.87%)

- y>q (28.11%); second option = y>o (17.96%); third option = y>ch (11.51%)

If we were to start at q and try taking each of these alternative options in turn, but all first options thereafter, we would hypothetically get:

- second option: qolkeedy.qokeedy….; third option: qotedy.qokeedy….

- second option: qokaiin.okeedy….

- second option: qokedy.qokeedy….

- second option: qokeey.qokeedy….; third option: qokeeokeedy….

- second option: qokeedaiin.okeedy….

- second option: qokeedy.okeedy….; third option: qokeedy.chedy….

Similarly, if we select some other starting glyph outside the loop, its path forward will present its own set of alternatives, as in this example:

- a>i (47.76%); second option = a>r (23.60%); third option = a>l (20.89%)

- within a>i, more specifically a>ii (26.65% of same total); second option = a>i (20.06% of same total)

- ii>n (95.13%)

- n>o (33.11%); second option = n>ch (26.05%); third option = n>Sh (13.81%)

If we were once again to try taking each of these alternative options in turn, but all first options thereafter, we would get:

- second option: ar.okeedy.qokeedy….; third option: alkeedy.qokeedy….

- second option: ain.qokeedy….

- second option: aiin.chedy.qokeedy….; third option: aiin.Shedy.qokeedy….

All this gives us a conceptually simple model of default behavior for Voynichese in which the tendency of the script is to repeat in an endless qokeedy loop unless it gets knocked out of it through a transition to some less probable option, as well as to fall back into the loop if and when it ever escapes from it.

So how do the model sequences I generated above for single-glyph deviations from the qokeedy loop match up against the actual text? A slim majority of them do in fact turn up multiple times, and we can generally find similar words and sequences to those that don’t.

- qokedy.qokeedy (15)

- qokeey.qokeedy (9)

- qokeedy.chedy (7)

- aiin.Shedy.qokeedy (6)

- qotedy.qokeedy (5)

- qokeedy.okeedy (4)

- aiin.chedy.qokeedy (4)

- ain.qokeedy (3)

- qokaiin.okeedy (1)

- ar.okeedy.qokeedy (1)

- qolkeedy.qokeedy (0), but qolkeedy.qokedy (3)

- qokeeokeedy (0), but qokeeoky (1); qokeokedy (1)

- qokeedaiin.okeedy (0), but qokeedaiin (2)

- alkeedy.qokeedy (0), but alkedy.qokedy (1); alkeedy (5)

I note in particular the similarity of the unattested qokeeokeedy to the words qokeeoky and qokeokedy, both of which would be considered irregular by most “word grammars.” It’s also worth mentioning that when I went searching for the predicted sequences, I seemed to find them turning up disproportionately often in Quire 13 (ff75-84) or Quire 20 (ff103-116).

We can also work in the other direction by taking actual glyph sequences from the text and seeing how our nascent model would analyze them. Here I’m going to draw on a dataset I created by removing all word and line breaks and identifying all glyph sequences that repeat exactly at least twice; I’ll call it the Repetition Dataset. It isn’t completely compatible with my present glyph adjacency analysis because it includes sequences that cross line breaks, but it’s still a handy point of reference. The longest exactly repeating sequences contained in it all seem to adhere rather closely to the qokeedy loop. All sequences in the following list occur twice unless otherwise stated.

- edyqokedyqokeedyqokeedy (plus another occurrence of just edyqokedyqokeedyqoke)

- olchedyqokainolSheyqokain

- okaiinShedyqokeedyqotedy

- edyqokaiinchedyqokeedyl

- keedyqokedyqokedyqoke

- edyqokeedycheedyqokeed

- edyqotedyqokeedyqokee

- edyqokeedyqokeedyqoke

- ealqokeedyqokeedyqoke

- olShedyqokedyqokeedyqo

- Shedyqokedyqokeedyqoke (3 occurrences; note overlap)

- Shedyqokedyqokeedyqot (note overlap)

- edyqokeedyqokedyqoke (3 occurrences)

- ysolkeedyqokeedyqoke

- okeedyqokeedyolkeedy

- edyqokeedyqopchedyqok

- eedyqolchedyqokeeyqoke

- qokeedyqotedyqokeedy

- Shedyqokalchedyqokaiin

- edytedyolSheedyqokeey

- edyqokaiinolkeedyqok

- keedyqokeedycheyqokee

- eedyokainchedychedytee

We see a lot of alternation here between ee and e, which we can analyze as substituting the second option k>e within k>e+. If we disregard this alternation in quantities of e, we’re left with the points of departure from the loop which I’ve highlighted below in red, with brackets indicating a “dropped” glyph.

- olchedyqokainolShe[d]yqokain

- okaiinShedyqokeedyqotedy

- edyqokaiinchedyqokeedyl

- edyqokeedycheedyqokeed

- edyqotedyqokeedyqokee

- ealqokeedyqokeedyqoke

- olShedyqokedyqokeedyqo

- ysolkeedyqokeedyqoke

- okeedyqokeedy[q]olkeedy

- edyqokeedyqopchedyqok

- eedyqolchedyqokee[d]yqoke

- qokeedyqotedyqokeedy

- Shedyqokalchedyqokaiin

- edytedy[q]olSheedyqokee[d]y

- edyqokaiinolkeedyqok

- keedyqokeedyche[d]yqokee

- eedy[q]okainchedychedytee

In these examples, we can spot a number of apparent substitutions for smaller or larger stretches of the default qokeedy glyph sequence. Each case can be explained by just one or two less-probable transitions, as follows.

For o_k_ee:

- o_lk_ee, takes second option o>l.

- o_t_ee, takes third option o>t.

- o_pch_ee, takes sixth option o>p (2.88%).

- o_lch_ee, takes second option o>l, then second option l>ch (19.73%).

- o_lSh_ee, takes second option o>l, then fourth option l>Sh (10.72%).

For y_q_o:

- y_o, takes second option y>o.

- y_s_o, takes tenth option y>s (2.16%), then second option s>o (26.53%).

For ee_d_y:

- ee_y, takes third option ee>y.

For y_qok_ee:

- y_ch_ee, takes third option y>ch.

For k_eedyq_o:

- k_ain_o, takes second option k>a; then regular a>i+ but second option within that, a>i.

For k_ee or k_eedyqok_ee:

- k_aiinch_e, takes second option k>a, then first options a>ii>n, then second option n>ch.

- k_aiinSh_e, takes second option k>a, then first options a>ii>n, then third option n>Sh.

The ways in which these longer sequences diverge from the qokeedy sequence may look rather random at first glance, but each can be shown to represent the unfolding of just one or two less-probable transition choices (and wherever there are two, the second might turn out on further analysis to be a consequence of the first—but we’re not there yet).

Of course, there’s a big chunk of the manuscript we haven’t yet considered. When we calculate an equivalent set of transitional probability matrices for Currier A, many of the first options turn out to be different (these cases are marked below with asterisks). Blue and red color-coding is applied as before, except that I’ve lowered the threshold for ambiguity to at least twenty cases going either way because of lower overall counts in this “language.”

- a>i+ (51.96%), a>l (19.37%)

- cFh>y (28.95%), cFh>o (26.32%)

- *ch>o (45.67%), ch>e+ (25.10%)

- cKh>y (34.80%), cKh>e+ (31.35%)

- *cPh>o (33.94%), cPh>e+ (28.44%)

- *cTh>o (38.27%), cTh>y (30.38%)

- *d>a (50.40%), d>y (26.73%)

- *e>o (51.92%), e>y (28.65%)

- *ee>y (51.82%), ee>o (28.24%)

- eee>y (43.02%), eee>s (26.74%)

- f>ch (44.44%), f>o (26.26%)

- g>o (35.29%), g>ch (17.65%)

- i>n (56.09%), i>r (26.21%)

- ii>n (94.80%), ii>r (2.81%)

- iii>n (90.91%), then single instances of iii>r, iii>l, iii>t, iii>d

- k>e+ (30.43%), k>ch (19.59%)

- *l>d (21.92%), l>ch (20.34%)

- *m>ch (23.49%), m>o (20.48%)

- *n>ch (21.25%), n>o (18.16%)

- *o>l (24.99%), o>k (16.53%)

- p>ch (51.23%), p>o (20.70%)

- q>o (97.11%), q>k (1.40%)

- *r>ch (24.82%), r>o (20.35%)

- *s>o (27.92%), s>a (23.38%)

- *Sh>o (46.61%), Sh>e+ (32.49%)

- *t>ch (30.87%), t>o (22.01%)

- *y>d (16.01%), y>ch (15.87%)

Contrastive bigram frequencies have long been used to distinguish Currier A from Currier B, but investigating transitional probabilities rather than absolute quantities may offer a productive alternative perspective on the differences between the two “languages.” For example, if we graphically compare the transitional probability matrices for e, ee, and eee—which seem to display a kind of continuous mutual trajectory as a “series”—we can see the vastly greater probability of a transition to d in Currier B play itself out in the context of other, competing transitional probabilities. This view complements the usual observation that the bigram ed is much more common in Currier B than Currier A.

Perhaps the most striking overall systemic difference we find with Currier A, though, is that instead of a qokeedy loop, there’s another, different closed loop:

- ch>o (45.67%)

- o>l (24.99%)

- l>d (21.92%)

- d>a (50.40%)

- a>i+ (51.96%)

- more specifically a>ii (39.24%; 75.52% within a>i+)

- ii>n (94.80%)

- n>ch (21.25%)

This default loop would generate the sequence choldaiincholdaiincholdaiin, which contains a strong breakpoint at n>ch, plus an ambivalent breakpoint at l>d, and so would predictably appear broken up into words either as choldaiin.choldaiin.choldaiin or chol.daiin.chol.daiin.chol.daiin. We could also express the same prediction as chol,daiin.chol,daiin.chol,daiin, using the convention of “comma breaks.”

Like the qokeedy loop, the choldaiin loop more readily permits escape at certain points than others. Here are the options we encounter while passing through the loop with at least a 10% probability.

- ch>o (45.67%); second option = ch>e+ (25.10%); third option = ch>y (14.69%)

- o>l (24.99%); second option = o>k (16.53%); third option = o>r (16.28%); fourth option = o>d (11.95%); fifth option = o>t (10.61%)

- l>d (21.92%); second option = l>ch (20.34%); third option = l>o (15.49%)

- d>a (50.40%); second option = d>y (26.73%)

- a>i+ (51.96%); second option = a>l (19.37%); third option = a>r (18.34%)

- more specifically a>ii (39.24%; 75.52% within a>i+); second option = a>i (11.87%; 22.84% within a>i+)

- ii>n (94.80%)

- n>ch (21.25%); second option = n>o (18.16%); third option = n>d (15.06%)

As with the qokeedy loop, by choosing single less-probable transitions we can generate familiar-looking sequences such as chor,chol,daiin, chy,daiin,chol, and cheol,daiin.

Torsten Timm has observed that the frequency of words in the Voynich Manuscript has an inverse correlation with the edit distance from three specific words: daiin, ol, and chedy. That is, the more similar to one of these three words another word is, the more frequently it tends to occur. He accordingly groups words into a daiin series, an ol series, and a chedy series based on which word each most resembles. If a word resembles two of these words, he finds that it tends to be even more common than if it resembles only one.

The three series in Timm’s model coincide closely with the two closed loops identified above. Timm assigns chol to the ol series, such that the chol and daiin series could be considered the two “halves” of the choldaiin loop. Meanwhile, he assigns qokeedy to the chedy series, such that the chedy series and the qokeedy loop appear to coincide as well.

One point that should give us pause for reflection is that the most common word Timm identifies in each series—daiin, ol, chedy—doesn’t always match the loop predicted by glyph affinities. There’s no discrepancy with daiin. But how might we account for Timm’s finding that there are 537 tokens of ol to 396 of chol, or that there are 501 tokens of chedy to 305 of qokeedy? The answer could lie in the fact that Timm is analyzing discrete words separated by spaces, while we’re instead analyzing glyph sequences continuously throughout lines. From the latter perspective, ol and chedy will often be fragments of longer sequences that differ from one another, such as rol and yol or lchedy and rchedy, but that end up excluded from the pool of words through frequent spacing as r.ol and y.ol or l.chedy and r.chedy, thanks to the presence of ambivalent breakpoints. By contrast, the sequence qokeedy has no ambivalent breakpoints, and the sequence chol has only the very weakly ambivalent o_l (I count fifteen tokens of cho.l and eleven of cho,l). Thus, I’m inclined to suspect that the higher frequencies Timm found for ol and chedy are, from the standpoint of glyph sequences per se, probably artifacts of spacing. A further circumstantial point in favor of privileging daiin, chol, and qokeedy is that these are the words in each of Timm’s three series with the highest quantities of adjacent exact repetitions:

- .daiin.daiin. (13)

- .chol.chol. (23) versus .ol.ol. (4)

- .qokeedy.qokeedy. (19) versus .chedy.chedy. (7)

Given the overlap between Timm’s series and my loops, it seems likely that Timm’s observations about edit distance from the daiin, ol, and chedy series would also apply to edit distance from the qokeedy and choldaiin loops. If so, then the frequencies of glyph sequences in the Voynich Manuscript would correlate with their adherence to the sequences generated by these two loops, with sequences that deviate less from them being more common, and with sequences that deviate more from them being less common. And if edit distance reckoned in Levenshteinian terms is also comparable in effect to choosing less-probable transitions (which I’ll admit isn’t guaranteed), that would suggest in turn that we’d be able to predict word frequencies based purely on a combination of transitional probabilities and spacing rules. I haven’t tested this hypothesis empirically, but it strikes me as a plausible extrapolation from Timm’s findings.

Moreover, if adherence to the qokeedy and choldaiin loops is a reliable predictor of the frequencies of glyph sequences, that would further support a model in which the default tendency of the script really is to repeat in an endless loop. Of course, much of the text doesn’t repeat conspicuously. When it doesn’t, the incidence of less-probable glyph adjacencies—or, if you will, the entropy relative to the loops—would seem to have risen above some hard-to-define threshold. But whenever such entropy drops below that threshold, we see default adjacencies come to the fore and yield repetitive-looking strings along the lines of qokeedy.qokedy, chor.chol, and daiin.dain. This latter behavior reminds me of nothing so much as an unmodulated carrier in telecommunications—or a minimally modulated one.

Now, as I admitted earlier, the first-order Markov model I’ve been describing may be a bit too simple. If we work out transitional probability matrices for whole bigrams rather than individual glyphs—for example, examining the glyphs that follow after ta rather than just a—we find that the first glyph of the bigram can sometimes make a big difference. In both Currier A and Currier B, for example, the transitions o>k and o>t overwhelmingly dominate after q, y, and n, but they’re less common than o>l, o>r, and o>d after most other glyphs. In Currier B, a transition from a glyph to y appears to be more probable if that glyph is preceded by e+. Such “exceptions” plainly demand attention.

And yet the closed qokeedy and choldaiin loops persist even if we advance to a second-order Markov analysis—that is, working out probabilities for the next glyph on the basis of two preceding glyphs rather than just one. If we start at qo in Currier B, and follow all the first options, we get:

- qo>k (61.91%)

- ok>e+ (43.85%)

- specifically ok>ee (23.69%; 54.03% within ok>e+)

- kee>d (45.10%)

- eed>y (86.55%)

- dy>q (35.55%)

- yq>o (97.88%)

If we start at cho in Currier A, we get:

- cho>l (31.05%)

- ol>d (22.15%)

- ld>a (54.22%)

- da>i+ (65.46%)

- specifically da>ii (49.37%; 75.42% within da>i+)

- aii>n (95.30%)

- iin>ch (20.94%)

- nch>o (44.41%)

And as before, if we start somewhere outside the prevailing loop, we still find ourselves drawn inexorably into it. If we start at qo in Currier A, and follow all the first options, we get:

- qo>k (48.13%)

- ok>e+ (32.53%)

- specifically ok>e (19.43%; 59.73% within ok>e+)

- ke>o (68.92%)

- eo>l (34.07%)

- ol>d (22.15%) — back in the choldaiin loop (via qokeol,daiin.chol,daiin….)

And if we start at cho in Currier B, and follow all the first options, we get:

- cho>l (34.27%)

- ol>k (22.62%)

- lk>e+ (46.37%)

- specifically lk>ee (27.01%; 58.25% within lk>e+)

- kee>d (45.10%) — back in the qokeedy loop (via chol,keedy.qokeedy…..)

The first-order Markov analysis laid out above might not be sensitive to all the nuanced patterns of Voynichese “word grammar,” but it does appear to capture some important patterning that holds up with higher-order analysis, and it has the practical advantage of simplicity. On those grounds, I’m going to stick with it for the moment in the interest of making my investigation of some further complicating factors more manageable.

One final point before I proceed. Some readers may be skeptical that mere transitional probabilities combined with spacing rules could produce the intuitively recognizable “word structure” of Voynichese. As a test, I’ve taken some of my calculated probability matrices and used them as a basis for generating random sequences in turn. Matrices derived from first-order Markov analysis seem to yield an implausibly high proportion of words containing more than one gallows glyph, as in this sequence based on matrices from Currier B:

qol.dy.dor.ol.Shey.or.Shokaiin.Shotalkar.chedy.Shy.chopcholkedy.Sheokeey.s.chcKhdy.chy.okeor.

odytey.odytodain.SheotShey.pchdy.keedy.dal.Shdy.Shetaiin.ol.ol.ody.dytchedy.qol.ol.Shekeedain.

Shedy.qol.l.chedy.dytar.olal.dy.qotey.qosal.cheokaiin.y.otchokchokeedain.cheey.y.pcholkar.Shar.

cheeotchedy.keedar.ain.cheeyty.Sheey.ol.chcKhoteey.l.dy.kaiin

When I use matrices from a second-order Markov analysis, though, I find my results beginning to match the expected structures and rhythms somewhat better. The following example is again based on matrices from Currier B.

ol.qokeodar.ar.okaiin.Shkchedy.Shdal.qotam.ytol.dal.cheokeedy.chkal.Shedy.qokair.odain.al.ol.daiin.

cheal.qokeeey.lkain.chcPhedy.kchdy.cheey.otar.cheor.aiin.Shedy.dal.dochey.opchol.okchy.Sheoar.ol.

oeey.otcheol.dy.chShy.lkar.ain.okchedy.l.chkedy.oteedar.ShecKhey.okaiin.chor.olteodar.okal.qokeShedy.

ol.ol.Sheey.kain.cheky.chey.chol.chedy

Although I generated these sample sequences randomly, I don’t mean to put them forward as evidence that the text of the Voynich Manuscript isn’t meaningful. Rather, I only want to suggest that Voynichese “word structure” could arise as a natural byproduct of transitional probabilities, and that it might be just as compatible with a continuous mode of encoding, glyph by glyph or bigram by bigram, as it is with one that operates on discrete words.

§ 2

Macroscale Analysis

The analysis I’ve presented so far has assumed that transitional probabilities are uniform throughout the text, except for the differences between Currier A and Currier B. But they’re not.

On one hand, there are more granular distinctions that can be drawn among sections of the manuscript. As I’ve mentioned, Quires 13 and 20 already seem to fit certain tentative predictions I’ve made for Currier B better than Currier B text does as a whole. I agree with others that the two “languages,” far from being neatly separable, are better understood as occupying regions of a spectrum. When it comes to developing the ideas I put forward in the previous section, I suspect the first impulse of many Voynichologists would be to investigate transitional probabilities within specific quires or other narrower groupings of folios that are recognized as sharing more than the usual share of features in common. That would certainly be worth doing. I can say, for a start, that Quires 1-3 (a contiguous block of “Herbal A”) independently display the choldaiin loop, while Quires 13 and 20 (two different blocks of Currier B) both independently display the qokeedy loop. By contrast, Quire 19 (a block of “Pharma A”) displays a weaker ol loop into which all other glyphs tend to be drawn, but from which escape is also very likely:

- o>l (32.98%)

- l>o (20.63%)

If we were to discard the analytical distinction between the Currier A and Currier B “languages,” as has been discussed, we might do worse than to substitute Choldaiinese, Qokeedyese, Olese, and maybe other loop-defined groupings yet to be discovered—perhaps with more nuanced subdialects of each.

But transitional probabilities—and other related features—also vary by position both within lines and within paragraphs. I’ve taken to calling the two parameters in question rightwardness and downwardness, such that phenomena can be more leftward or rightward in lines and more upward or downward in paragraphs. It’s this other kind of variation I’d like to explore here next.

A few positionally variable factors have long been recognized. For example, the probability that the first glyph in a paragraph will be p is extremely high (around 45%), and p and f appear far more often in the first lines of paragraphs than anywhere else. Similarly, m and g appear far more commonly as the last glyph in a line than in any other position. Like “word structure,” these last-mentioned patterns are easy to spot even through casual browsing. From the standpoint of “word grammars,” such phenomena are typically regarded as exceptions, prompting such questions as whether the first words of paragraphs are “normal” words with p prefixed to them. But if we shift our focus away from words and towards continuous glyph sequences, these patterns can be seen as entirely regular—just with respect to a different, larger-scale frame of reference.

To accommodate the issue of positional variability, I’ve experimented with defining different quantities of positional category within lines, such as these—

- A: The first adjacency in the line

- B: The first third of the line (excluding first and last adjacencies)

- C: The middle third of the line (excluding first and last adjacencies)

- D: The last third of the line (excluding first and last adjacencies)

- E: The last adjacency in the line

—and also different quantities of category by paragraph position, such as these:

- 1: The first line of the paragraph

- 2: The remainder of the first half of the paragraph

- 3: The second half of the paragraph, excepting

- 4: The last line of the paragraph

A framework of this sort enables us to compare and contrast phenomena of interest within each of the combinations of line position and paragraph position (e.g., A-1, A-2, A-3, A-4, B-1, B-2, B-3, B-4, etc.) as well as for each of the line positions for all paragraph positions (e.g., A:E-1, A:E-2, etc.) and each of the paragraph positions for all line positions (e.g., A-1:4, B-1:4, etc.). It maps information onto a grid like the following one, in which squares in columns A and E and rows 1 and 4 are especially likely to represent sets of unlike size from the others.

I’m unsure what the optimal number of divisions is for lines and paragraphs. Is it best to divide the line into three parts, as here, or into four quarters, or five fifths, or seven sevenths? Would it be better to divide the paragraph into three thirds than into two halves, so that we could assess the “middle” third? The main advantage of increasing the number of divisions is enhanced precision and a greater ability to distinguish continuous trends. The main disadvantage is the reduction of the available dataset within each division, which amplifies the effects of random noise. There’s probably a sweet spot to be found here through trial and error, and this may become more clear over time than it is right now.

One of the simplest things to track in this way is glyph distribution: all we need to do is count the glyphs in each section of the line. I designed my script mainly with transitions between glyphs in mind, though, so it divides the line into segments in terms of those, as described above, rather than in terms of the glyphs themselves. When it comes to counting the first glyphs of bigrams, which are my primary point of reference, the first segment of the line contains the first glyph of the line (preceding the first transition), the last segment of the line contains the second-to-last glyph of the line (preceding the last transition), the other groups represent fractions of the stretch from the second glyph through the third-to-last glyph, and the last glyph of the line isn’t tracked at all. However, I also keep an extra count of the second glyphs of the bigrams—the glyphs that are transitioned to—so by analyzing those as well, we can regain the lost symmetry.

As I mentioned above, it has long been known that the distribution of some glyphs varies by position. The observation that certain glyphs are disproportionately rare or common at the beginnings or ends of lines, or disproportionately common in the first lines of paragraphs, dates back to Prescott Currier:

The frequency counts of the beginnings and endings of lines are markedly different from the counts of the same characters internally. There are, for instance, some characters that may not occur initially in a line. There are others whose occurrence as the initial syllable of the first ‘‘word’’ of a line is about one hundredth of the expected…. There is…one symbol that, while it does occur elsewhere, occurs at the end of the last ‘‘words’’ of lines 85% of the time…. [The symbols p and f] appear 90-95% of the time in the first lines of paragraphs, in some 400 occurrences in one section of the manuscript.

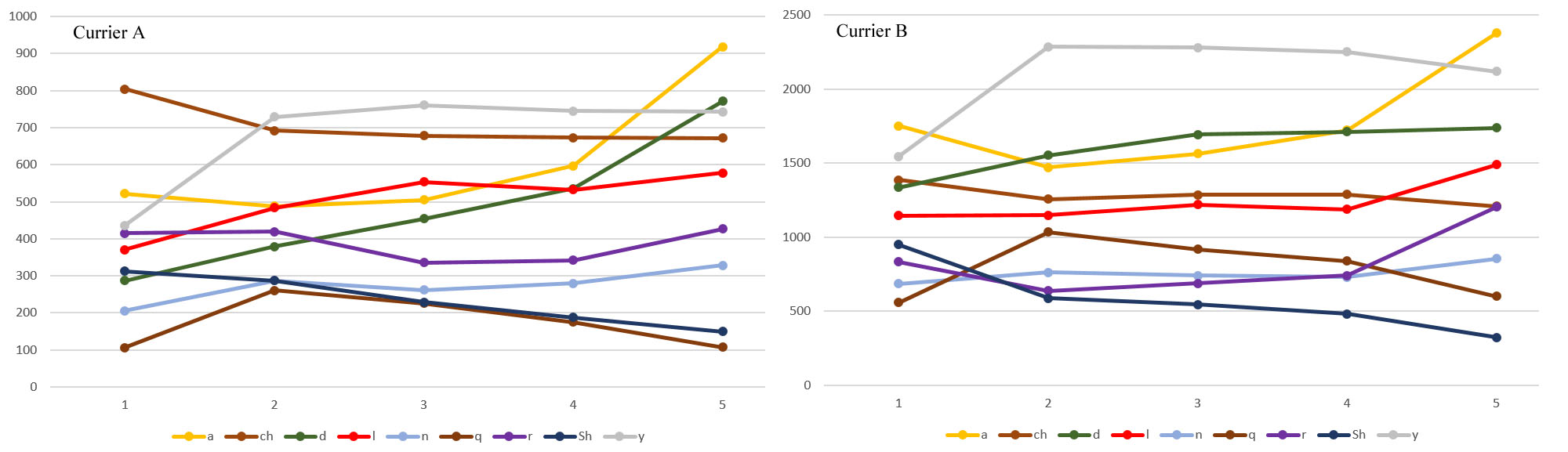

Patterns such as Currier mentioned could perhaps be explained in terms of superficial tweaks to text that is otherwise uniform in character: elaborating t and k into p and f in the first lines of paragraphs, extending n into m at the ends of lines, and so on. However, I believe evidence has been mounting that glyphs can also display continuously variable distribution throughout lines and paragraphs, which makes the variation rather harder to segregate neatly from the rest of one’s analysis. Here’s a graph I posted to the Voynich.Ninja forum showing counts for some common glyphs in each of five “internal” line positions based on the line-division strategy described above—my first crude attempt at plotting data of this kind.

Here are a few of the specific sets of five numbers from the same dataset for Currier A:

- s: 92, 134, 139, 185, 204

- ch: 804, 692, 678, 673, 672

- Sh: 312, 287, 229, 187, 149

- d: 287, 379, 454, 536, 771

Even without a graph, it’s easy to see here that the counts for s and d go continuously up, while the counts for ch and Sh go continuously down, and that the rates of change also differ from case to case.

My first impulse was to calculate single rightwardness and downwardness “scores” for glyphs, bigrams, words, and such to reflect their overall average inclinations rightward or leftward and upward or downward. But I’ve since tried applying some more elaborate visualization strategies to this kind of data, and it’s becoming increasingly apparent that the distribution of many Voynichese glyphs varies according to patterns that have shapes as well as directions.

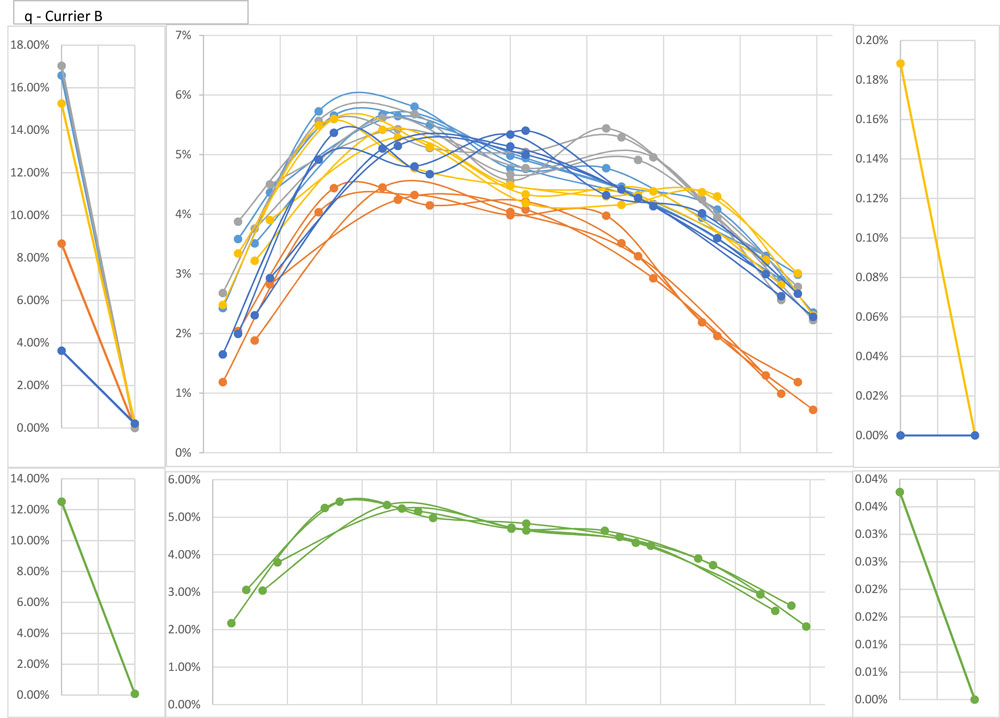

Here’s one technique I’ve been using. I divide paragraphs into five sectors—1 = first line, 5 = last line, and 2-4 = whatever remains in the middle, divided into thirds—and I simultaneously divide lines into fifths (for a first analysis) and sevenths (for a second analysis), both times splitting off the first and last adjacencies as well. This arrangement maps positions onto a 2D grid along the lines laid about above, 5×7 in the one case, 5×9 in the other. For each of the eighty groupings that result, I tally the first glyphs and second glyphs of each adjacent glyph pair. This may seem like a clunky approach, but by combining the two counts I can get totals for all glyph tokens in first position, second position, last position, and second-to-last position, as well as a pair of totals for each intermediate group with their “windows” offset by just one position. Finally, I divide each result by the total count in its cell of the grid to normalize the figures for group size. Then it’s time to take a look at what we’ve found. On the left of my display, I plot the results for the first two positions; on the right, the results for the last two positions; and in the middle the results for all the intermediate positions, with the fifths and sevenths interleaved, and with the results of the “first glyph” and “second glyph” counts offset by a slight, arbitrary distance along the x axis. Each of the four interleaved series of plot points (first glyph with five divisions, second glyph with five divisions, first glyph with seven divisions, second glyph with seven divisions) is connected by a separate line. On top, I show the results for each separate paragraph sector color-coded in a way that’s meant to suggest a blue-to-red spectrum: (1) dark blue for first lines, (2) light blue for first thirds, (3) gray for second thirds, (4) yellow-orange for last thirds, and (5) red for last lines. On the bottom, in green, I show the aggregate values for all paragraph sectors lumped together.

With all that explanation out of the way, let’s look at some results.

A good example of a glyph with a distinctly-shaped distribution is q. Here’s a display of data for it from Currier B, showing a characteristic double hump with a higher peak on the left. The color-coding may be more challenging to take in visually than the curve-shape is, but the red (last lines) and dark blue (first lines) sort lower than everything in the middle; the average percentages for the five paragraph sectors are 3.87, 4.55, 4.62, 4.32, and 3.09. Thus, q seems to gravitate towards the middle—or to shy away from the extremities—in both lines and paragraphs.

Such convergent tendencies within lines and paragraphs don’t seem to be the rule, but another possible case is the distribution of Sh in Currier A. The aggregate (green) curve below shows a clear downward slope in prevalence across lines, from around 4.5% to around 1.5%. Meanwhile, the color-coding indicates that Sh is more prevalent in the first lines of paragraphs (dark blue), and less prevalent in the last lines of paragraphs (red), than anywhere else. It’s true that the downward trajectory isn’t wholly consistent in between (average percentages for sectors are 4.81, 2.74, 2.61, 3.03, 2.13), but for the most part, it seems that Sh becomes less common the further we go along in a line or in a paragraph, rightward and downward.

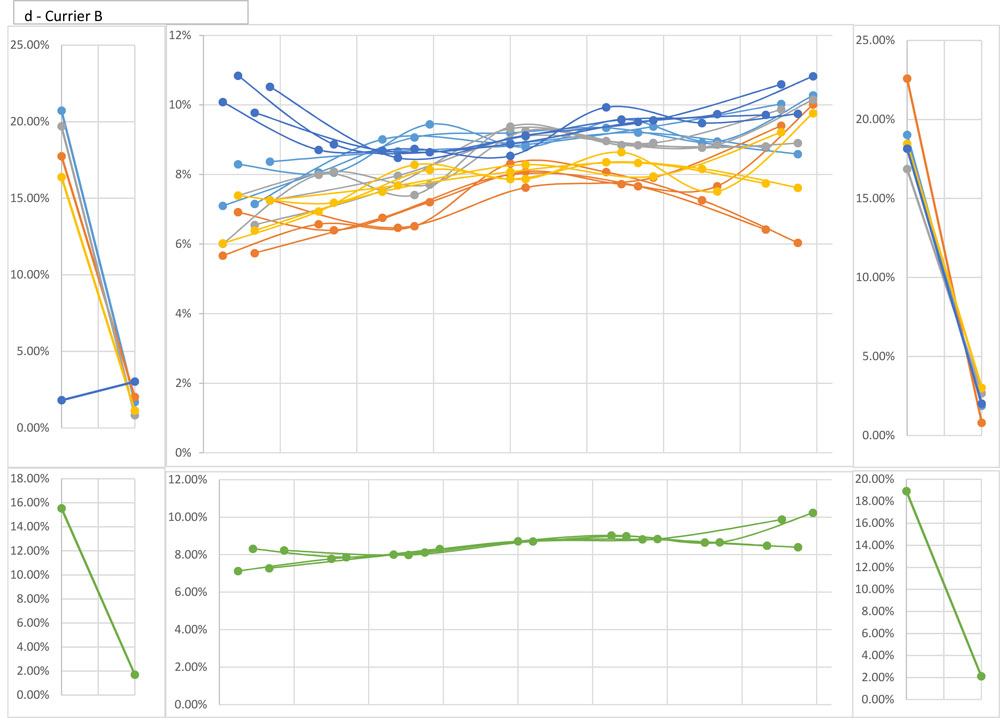

Here’s a similar graph for d in Currier B. This time, the aggregate (green) curve shows comparatively little variation within lines, but prevalence decreases with striking consistency across paragraphs; average percentages for the five sectors are 9.34, 9.18, 8.72, 8.13, and 7.93.

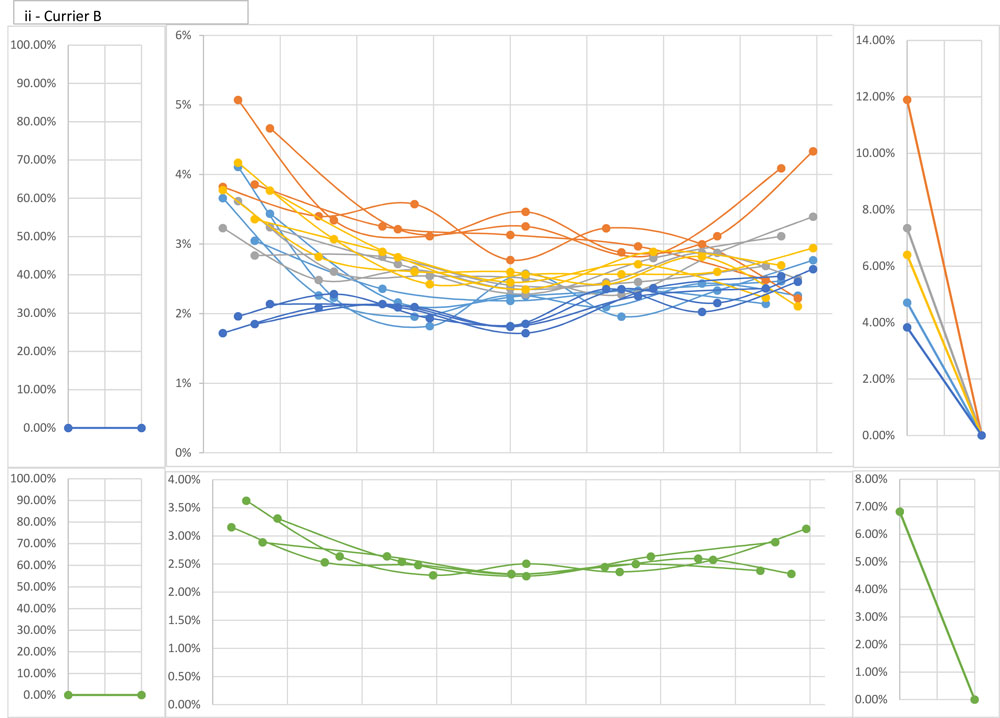

Meanwhile, ii shows the exact opposite trajectory in Currier B; average percentages for the five paragraph sectors are 2.08, 2.40, 2.69, 2.74, and 3.33. The pattern for i is similar (average percentages 1.70, 1.87, 1.93, 2.07, 2.28) but harder to make out visually because of the smaller differences, so that I overlooked it at first. For what it’s worth, neither pattern is evident in Currier A.

Those are just a few out of many similar graphs I could have chosen. Most glyph types turn out to have their own unique patterns of distribution within whole lines and paragraphs, just as surely as they prefer to be adjacent to certain other glyph types. While this phenomenon has only just started to be recognized and investigated, it’s one with which I suspect any theory about how the Voynich Manuscript works will ultimately need to come to terms.

One response to this widescale positional variability would be to posit that there are separate microscale and macroscale factors at play in the structure of Voynichese. By microscale factors, I mean the ones that govern the level of transitions between individual glyphs. The conspicuous formal regularity on this level has inspired many studies of “word structure,” as well as my own line of speculation above regarding the qokeedy and choldaiin loops, and the relevant rules for valid words seem, at least on the surface, to apply fairly uniformly. By contrast, macroscale factors would concern the preferential, non-uniform ways in which glyphs are distributed with respect to whole lines and paragraphs. If microscale analysis addresses the question of why the glyph y appears in one particular place in one particular word, macroscale analysis might instead address the question of why it appears where it does on the page.

It might seem at first glance as though a wide gulf separates those two levels of analysis, with no clear path to get from the one to the other. But I think the approach I began developing in the preceding section could help us bridge them, at least as far as the mechanics of analysis go. If the distribution of a glyph varies by position, the aggregate probabilities of transition to that same glyph must necessarily also vary by position—there’s no way, statistically, that it could be otherwise. To accommodate that reality, we could simply qualify our transition probability matrices to stipulate that the probability of, say, l>d isn’t uniform for all line positions but varies as a function of them, so that the macroscale dynamics are integrated into the microscale dynamics, and vice versa.

I’ll admit that “simply” may be a bad choice of word; this is a conceptually simple step, but not necessarily simple to put into practice. (Jorge Stolfi’s “word grammar” could perhaps be adapted similarly by adding one or more positional dimensions to his frequency statistics, if anyone would like to try.) I’ll also admit that this arrangement, in itself, can’t explain anything. It only provides a mechanism for organizing statistical data so that we can look for patterns in it. What those patterns might mean is another matter entirely.

By way of a start, let’s take a look at how positional variability impacts the two closed loops that emerged from our earlier analysis: the choldaiin loop in Currier A and the qokeedy loop in Currier B. Whatever conclusions we might end up drawing about the loops as such, I believe it’s fair to treat them as representative test cases for the microscale structure of Voynichese. I can’t imagine how any factor powerful enough to disrupt them wouldn’t also disrupt the structure as a whole, regardless of whether we analyze it through transitional probability matrices or a “word grammar” such as Stolfi’s.

The two weakest links in the choldaiin loop are l>d (21.92%) and n>ch (21.25%), and if we look at these transitions within Currier A more closely, we discover that each of them actually dominates only in a certain limited part of the line. For most line positions, it turns out that l>ch is actually more probable, but at the very end of the line, l>d becomes so overwhelmingly strong as to tip the overall statistics for the whole line in its favor. The following graphs exclude the first and last transitions of lines to let us focus on the “internal” situation.

Meanwhile, n>ch dominates through much of the early-to-middle part of the line, but n>o is more probable right at the beginning and—as n>ch starts to decline—becomes more competitive again, while n>d rises steeply to overtake both towards the very end.

Since the line segments in which l>d and n>ch dominate don’t significantly overlap, there doesn’t actually seem to be anywhere the choldaiin loop as a whole would pertain. This loop turns out to be even more of an abstraction than I’d thought. The first half of the line would hypothetically loop chol,chol,chol by default, while the last third of the line would loop daiin.daiin.daiin. On that basis, it might be more defensible to think in terms of separate chol and daiin loops that can either repeat independently or alternate with each other (which would end up conveniently mirroring Timm’s identification of separate ol and daiin series of words).

If we compare the major Currier A transitions for l and n by paragraph sector rather than by line position, we find variation on that front as well.

On the left, we see that l>d holds an early lead and maintains its absolute level fairly steadily, but that l>ch rises to overtake it by the middle third of the paragraph. On the right, we see that n>o dominates the first lines of paragraphs, but that n>ch dominates everywhere else, and more strongly the further downward in a paragraph we go. The transitions l>Sh and n>Sh turn out to be especially strong in the first lines of paragraphs as well, and to decline in what looks like inverse proportion to the rise of l>ch and n>Sh. Thus, the constituent probabilities of the choldaiin loop vary by position within paragraphs as well as within lines. Curiously, n>ch and l>ch both become less dominant as we move rightward in lines but more dominant as we move downward in paragraphs. In other words, they don’t show any one trajectory as we move “forward” in the text, but two mutually contradictory ones.

The next weakest link in the choldaiin loop after l>d and n>ch is o>l (24.99%), but it turns out to be a lot more resilient with regard to positional variation. It consistently maintains its first-place status across all line positions, although its distance from o>r and other competitors varies.

The situation is similar with variation by paragraph sector: there are some notable differences among transitional probabilities from o, such as the unusually low proportion of o>k in the first lines of paragraphs, but o>l holds its lead everywhere.

You may recall that Quire 19 (“Pharma A”), analyzed as a whole without factoring in positional variation, displays an ol loop rather than a choldaiin loop. The only refinement in the general characteristics of Currier A needed to bring that about would be an increase in the probability of l>o relative to l>ch and l>d—an exaggeration, perhaps, of the relative trajectories we see in the middle of the line, where l>o is most competitive. We would then have Olese rather than Choldaiinese.

How about the qokeedy loop? Every one of its links is stronger than l>d, n>ch, and o>l in the choldaiin loop. However, its weakest two links are y>q (28.11%) and o>k (30.31%), so let’s take a quick look at those. First, y>q is consistently dominant throughout the line in Currier B, even if y>o begins to approach it in probability towards the very end.

Meanwhile, o>k dominates every position except for the very beginning, where o>l has a slight edge over it.

There’s also some variation by paragraph position in these same transitions from y and o, particularly in the first and last lines, but we don’t see the complex crossovers we did for transitions from l and n in Currier A.

There’s also some variation by paragraph position in these same transitions from y and o, particularly in the first and last lines, but we don’t see the complex crossovers we did for transitions from l and n in Currier A.

On the whole, the qokeedy loop seems to pertain more consistently in Currier B than the choldaiin loop does in Currier A.

Unsurprisingly, the patterns of transitional probability we’ve been examining bear at least some passing resemblance to the patterns of distribution of the individual glyphs the transitions are to. We know, after all, that these phenomena must relate to each other somehow. But it’s worth asking just how closely the two patterns resemble each other. The answer seems to vary from case to case.

Consider l>d in Currier A: its rise to dominance late in the line coincides with a jump there in the proportion of total glyphs made up by d itself. In other words, l>d becomes more probable as d becomes more common. Meanwhile, l>ch also becomes less probable as ch becomes less common. If we divide the line into five analytical segments and plot the probability of l>d against the proportion of second glyphs that are d, and the probability of l>ch against the proportion of second glyphs that are ch, we find that the resulting plots come out impressively straight, just as we’d expect from a close linear correlation.

The gradients both look to be in the ballpark of 1:5, which is to say that a 1% change in the overall share of the glyph in the mix seems to correspond to a 5% change in the probability of the relevant transition. Given that Voynichese glyphs prefer so strongly to transition to other specific glyphs, it might not be strange to see a change in glyph distribution have an amplified effect on an associated transitional probability.

If we split the line into seven analytical units instead of five, we turn up more chaotic back-and-forth around positions 4 and 5, right where l>d is overtaking l>ch in probability, but the overall directionality is similar. It’s notable, too, that multiple points cluster closely together: 1, 2, 3 and 4, 5 in the plot for l>d versus d and 2, 3, 5 (but not 4) in the plot for l>ch versus ch, as though the possibilities are somehow quantized and can only jump between discrete values when some kind of tipping point is reached. (This phenomenon was also noticeable to a lesser degree with just five line divisions: note the apparent clustering above of positions 1 and 2 for both l>d and l>ch, and of positions 3 and 4 for l>ch.)

But other cases aren’t as tidy as this one. For n>ch, we’d need to make a three-way comparison with both n>o and n>d, and o, you may recall, is one of those glyphs we know isn’t handled well by our first-order Markov analysis in the first place. Here’s what we find:

My graph’s y axis is more compressed this time than before, so the gradients are actually more similar than they look to those we saw above. And if we consider just the displacement between line positions 1 and 5, the change in the transitions is roughly what we’d expect from the change in frequency of the associated glyphs. Points 1, 2, and 3 for n>d and n>o, and points 1 and 2 for n>ch, also seem to show the same “clustering” phenomenon we observed earlier. But we don’t quite see the steady, progressive slope we see when we plot l>d against d. Of course, we’re only considering rightwardness here, and not downwardness; maybe taking the two together would help. But it looks as though variation in transitional probabilities might not always just recapitulate overall variations in the distribution of individual glyphs. At least, I can’t reliably predict the one from the other to my satisfaction just yet.

Still, I believe it’s at least safe to say that positional constraints work on smaller units of text than words, regardless of whether those smaller units prove specifically to be glyphs or transitions or bigrams or some combination thereof. Since positional tendencies implicate such granular details as whether n is more likely to transition to ch, d, or o, or how likely d is to occur in general, they must plainly be operating at the sub-word level. To the extent that words also show patterns of variable distribution by position, that’s presumably because they’re built up from the results of smaller-scale processes on which positional factors exert a more direct influence.

Let’s next examine a few transitions from a slightly different angle, using a display more like the one I used earlier for examining glyph distributions. (Just getting a good look at what’s going on often feels like half the battle!) The graphs below show transitional probabilities for o>t, o>k, l>y, and d>y color-coded by paragraph sector as before, with line positions assigned to the x axis (1=first transition, 9=last transition, and 2-8=seven intermediary segments), and with statistics for Currier A on the top and Currier B on the bottom.

There’s plenty to see here, but for the moment I’d like to draw out just a few specific observations. First, o>k is less probable in the first lines of paragraphs—plotted in dark blue—than anywhere else, while o>t tends by contrast to be more probable there than it is overall. Second, o>t and o>k often display conspicuous peaks at the first transition in the line—position “1” on the x axis—though not always. Third, l>y and d>y are both more probable as the last transition in a line than anywhere else, but l>y more extremely so than d>y, and with the discrepancy between the two being greater in Currier B than in Currier A.

The reason I’ve singled out these four specific transitions for consideration here is that they also happen to be characteristic of the most common “label” words—that is, self-standing glyph sequences that occur outside the context of lines and paragraphs. The very existence of labels could perhaps be seen as undermining any line of speculation centered on positioning within larger-scale structures, since labels don’t seem to belong to such structures and might be taken as proof that Voynichese can function perfectly well without them. But only certain specific sequences with a remarkably consistent structure can be found appearing more than three times as labels:

- 8 tokens: otaly, otedy

- 6 tokens: okal, okary, oky

- 5 tokens: okaly, okeody, okol, otchdy

- 4 tokens: okedy, okody, oteedy, otol, otoly

All fourteen of these sequences begin with ot or ok, while nine of them end with ly or dy (r>y, as in okary, is also overwhelmingly more probable at the ends of lines than elsewhere).

Some of these sequences aren’t particularly common in paragraphic text. Disregarding word breaks, I count 5 tokens of otaly in Currier A and 21 in Currier B; of okaly, 17 and 29; of okary, 2 and 12. From the standpoint of “word morphology” and overall word frequencies, then, the frequent appearance of these distinctively-structured words as labels would present something of a mystery.

However, if we consider these label sequences not as words but as unusually short lines, they conform somewhat better to expectations. As we’ve seen, the transitions l>y and d>y are disproportionately common at the ends of lines, just as they are at the ends of these labels. The situation with o>k and o>t is less clear-cut, but both transitions display conspicuous beginning-of-the-line peaks under at least some circumstances, much as they appear conspicuously at the beginnings of these labels. In the context of ordinary lines, o>k or o>t might tend not to appear often in close proximity to l>y or d>y or r>y because a number of middle-of-the-line glyphs usually separate them. But within the smaller compass of a label, the same positional constraints might make their appearance together far more likely. I offer this as one intriguing hint that the same forces that shape the macroscale structures of lines and paragraphs might apply to labels as well.

One more phenomenon that seems to vary by position is spacing. I haven’t analyzed this as thoroughly as I could, although some of my Python scripts distinguish between spaced, unspaced, and comma-spaced variants, in addition to giving the totals of all these, which quadruples the already-bloated size of the spreadsheets. For now, I’ll content myself with sharing statistics for just one of the glyph adjacencies we’ve just been looking at, l_y. The figures below give the token quantity apart, with a comma (i.e., uncertain), and together, and then the “apart” count as a percentage of the “together” count.

- 1st adjacency: unattested

- 1st third of line: Currier A = 14, 2, 20, 70%; Currier B = 15, 3, 13, 115%

- 2nd third of line: Currier A = 26, 0, 42, 62%; Currier B = 26, 5, 53, 49%

- 3rd third of line: Currier A = 17, 2, 50, 34%; Currier B = 18, 2, 51, 35%

- Last adjacency: Currier A = 2, 2, 146, 1.3%; Currier B = 0, 3, 123, 0%

Judging from these figures, the probability of a space being inserted between l and y isn’t uniform but decreases steadily over the course of a line. We might hypothesize that this pattern reflects the writer tending to run out of room towards the ends of lines and trying to cram glyphs together more closely there, but I doubt that’s the cause. In an earlier study I made only of the total average “rightwardness” of spaced and unspaced variants, I found similarly that ly is more rightward on average than l.y is, but that for some other glyph pairs, it’s the spaced variant that’s more rightward on average—that is, a space apparently becomes more likely to be inserted between them the further along in a line they appear. And these differences display distinct patterns of their own, so they don’t seem to represent mere statistical noise.

There’s one more point I should make before moving on. When I look for an aggregate pattern that “should” be more common in one part of a line than another, based on the foregoing analysis, it tends not to be. But the usual entropy of the writing may be great enough overall to “drown out” the effects of the patterns on the level of individual lines and words. That’s not a bad thing. I hold out hope that the entropy will prove to be the meaningful part.

§ 3

Intermediate-Scale Analysis

As I acknowledged earlier, first-order Markov modeling doesn’t accommodate all the detectable patterns of Voynichese. Second-order Markov modeling seems to fare better, and third-order Markov modeling better yet. But the first-order modeling already captures so much of the patterning that ratcheting up our analysis in this direction seems more likely to refine its findings than wholly to overturn them. Transitional probabilities often seem to depend on the single glyph that immediately precedes the transition. If it seems they sometimes don’t, it’s worth looking closely at the “exceptions” to see whether they share any characteristics in common.

It remains an open question, of course, whether the “exceptions” are due to characteristics of the script itself or of content to which the script has been applied. Especially if the text is meaningful, its patterning shouldn’t be entirely predictable. In particular, I don’t believe the goal of a model should be to predict all attested words as valid and to reject all non-attested words as invalid. Given how many words or sequences in the Voynich Manuscript are unique, appearing only once, I’d argue that any plausible model ought also to predict words and sequences that would have been unique if they had appeared—or that might have appeared only on one of its missing pages. In Stolfian terms, it should also predict the occasional word with multiple core letters, multiple coremantles, or other “anomalous” features, since these are demonstrably admissible even if they’re comparatively rare. On this level, first-order Markov modeling might actually be perfectly satisfactory. Where it falls short is in predicting certain relative frequencies: for instance, the ratio between the token quantity of qok and the token quantity of qor. To be fair, that’s not something most “word grammars” tend to be able to do (or to try to do) either. But since the whole transitional-probabilities approach is based on relative frequencies in the first place, its validity might be held to stand or fall on that basis more than the validity of other approaches would.

To study the situation more methodically, I calculated transitional probability matrices for bigrams, as well as for just the second glyphs in the context of bigrams (e.g., or> vs. *r>, where * represents the first glyph in a bigram, excluding line-initial r>). I also made a similar comparison of transitional probability matrices for trigrams with those of their concluding bigrams (e.g., dol> vs. *ol>); and for quadrigrams with those of their concluding trigrams (e.g., doky> vs. *oky>); and similarly for quinquegrams, sexagrams, and septagrams. If needed, I could continue the same process indefinitely, but by the time we hit septagrams, we’ve already reached the length of the qokeedy loop and exceeded the length of the average word.

This approach makes it easy to detect cases in which earlier glyphs appear to correlate more strongly with subsequent transitional probabilities than the glyphs that immediately precede the transitions do. For example, if we focus on Currier B (as I will for the examples that follow until further notice), we find:

- *d>a: 24.57%

- Much less probable: eed>a: 8.15%, ed>a: 11.45%

- Less probable: chd>a: 21.88%, Shd>a: 22.56%

- More probable: od>a: 34.83%

- Much more probable: ld>a: 50.88%, yd>a: 66.88%, nd>a: 72.67%, rd>a: 72.73%

And also:

- *d>y: 66.73%

- Much less probable: nd>y: 11.05%, yd>y: 15.42%, rd>y: 17.05%, ld>y: 37.17%

- Less probable: od>y: 52.42%, Shd>y: 64.02%

- More probable: chd>y: 69.71%

- Much more probable: eeed>y: 83.10%, ed>y: 83.59%, eed>y: 86.55%

It’s evident here that the likelihood of a transition from d to y or a has a strong correlation with the glyph that precedes the d. Overall, the transition d>y is a little more than two and a half times more probable than d>a. However, after n, y, r, and l, d>a is actually more probable than d>y, while after e, ee, and eee, the probability of d>y exceeds that of d>a by much more than usual. This latter shift is also found occurring in transitions from numerous other glyphs: ch>y, cKh>y, cTh>y, k>y, s>y, Sh>y, and t>y all become significantly more probable after e+.

Here’s another pair of related cases:

- *o>r: 8.93%

- Much less probable: qo>r: 0.57%

- Less probable: no>r: 5.16%, yo>r: 5.83%

- More probable: eo>r: 10.91%, eeo>r: 11.73%, ro>r: 13.81%, cho>r: 14.78%

- Much more probable: Sho>r: 17.05%, so>r: 18.56%, to>r: 19.27%, lo>r: 19.57%, ko>r: 22.78%, do>r, 24.82%

And:

- *o>k: 30.43%

- Much less probable: eeo>k: 2.30%, ko>k: 2.85%, to>k: 4.32%, eo>k: 5.42%, so>k: 7.78%, do>k: 7.91%, Sho>k: 9.09%, cho>k: 10.45%

- Less probable: lo>k: 16.81%, ro>k: 19.10%, no>k: 28.91%

- More probable: yo>k: 32.31%

- Much more probable: qo>k: 61.91%

This case resembles the last one: we can see that the glyph that precedes the o strongly affects the likelihood of a transition to r or k afterwards. After q, o>k is overwhelmingly more probable than o>r, and it’s also much more probable after n and y. But after most other glyphs, it’s the other way around: o>r is more probable than o>k. Nor are these isolated cases; *o>t behaves much like *o>k, and *o>l much like *o>r.

Here’s yet another related pair of cases:

- *y>k: 5.90%

- Less probable: dy>k: 2.87%, eey>k: 3.82%

- More probable: ey>k: 6.68%, chy>k: 7.85%

- Much more probable: ly>k: 17.20%, ry>k: 26.67%, ny>k: 38.24%, yy>k: 44.64%

And:

- *y>t: 4.67%

- Less probable: dy>t: 2.41%, eey>t: 2.99%, ey>t: 4.18%

- More probable: chy>t: 5.52%

- Much more probable: ly>t: 11.83%, ry>t: 26.06%, ny>t: 33.53%, yy>t: 35.62%

This time it’s the similarities between the two cases to which I want to draw attention rather than the differences. The glyphs preceding y line up in identical order when ranked according to the probability of a subsequent transition from the y to k or t: namely, d, ee, e, ch, l, r, n, y.

Let’s now consider a few different explanations for why a first-order Markov analysis of Voynichese might fall short in its predictions, and the implications of our findings so far for them.

- Voynichese is encoded continuously in a way consistent with transitional probability matrices, but certain bigrams, such as ok or qo, behave as distinct and inseparable elements rather than as combinations of their parts.

- Voynichese has a significant “word structure” after all, or at least a cyclical structure, such that the probability of k or r following after o varies based on which slot in the cycle o itself fills.

- Voynichese is encoded continuously in a way consistent with transitional probability matrices, but glyphs can sometimes affect transitions beyond the ones that follow immediately after them.

I believe the first of these hypotheses is the least promising of the three based on the patterns we’ve examined so far. The most notable discrepancies have appeared to involve:

- A correlation between y, q, or n and an increased probability of transition to a gallows glyph two positions later.

- A correlation between e+ and an increased probability of transition to y two positions later.

So, for example, when n precedes either y or o, we find that the y or o is more likely in turn to transition either to k or t. If bigrams such as ny or yk were behaving entirely as distinct and inseparable units, rather than as combinations of n+y and y+k, it seems to me that we wouldn’t expect their pieces to display such individually consistent correlations. As it stands, even when the probabilities of transition from one glyph vary greatly depending on the glyph that precedes it, the options still seem to follow fairly set patterns. The first choice after o is usually either k or l, and if it happens to be something else—such as d after eo and eeo—it’s generally still a reasonably high-ranking choice in other cases too.

Hypotheses two and three both seem tenable at this point, but for the moment I’m mainly going to pursue hypothesis number three, in part because it seems more consistent with the path we’ve followed here so far. I believe that one of the strengths of the transitional-probability approach is that it predicts cyclical behaviors—such as the qokeedy loop—without requiring them to be defined a priori as a point of reference. We’ve already seen how much of the basic “word structure” could emerge naturally through the interplay of smaller-scale rules operating on a glyph-by-glyph basis. I’d like to continue looking for models that involve the fewest and simplest rules, and that center on dynamics by which structures could emerge rather than on outlining specific structures as such.

With that in mind, I’d like to propose a theory of delayed influence, beginning with the conjecture that a glyph can exert an influence not only on the transition immediately following it, but on the transition after that as well, complementing the influence of whatever glyph intervenes. According to this theory, after the sequence ny—for example—the effective transitional probability depends partly on the y, with its matrix of probabilities for a glyph that immediately follows, but partly also on the n, with its separate matrix of delayed probabilities for a glyph two positions ahead.

It’s cumbersome to investigate patterns of delayed influence on a case-by-case basis as I’ve been doing, so I’ve made an effort to come up with methods of detecting them more efficiently.

Here’s one approach I’ve tried, which has its weaknesses but still seems useful and informative. For each transition from a given bigram (e.g., ny>k), I count both the occurrences of the specific transition and the quantity of all transitions from that same bigram (e.g. ny), and I also calculate the transitional probability for just the latter half of the bigram (e.g., *y>k, where *y represents a bigram of which y is the second element, excluding line-initial y). I then multiply the transitional probability matrix for the latter half of the bigram (e.g., *y>k) to the count of tokens of the bigram (e.g., ny), thereby yielding a prediction of the number of tokens of the transition (e.g., ny>k) we should expect if the first element in the bigram (e.g., n) had no influence on the transition. There are 170 transitions from ny and 65 occurrences specifically of ny>k, while the probability of *y>k is 5.90%. If the n had no influence on the transition ny>k, and if that transition behaved like *y>k in general, we would expect there to be about 170×0.0590=10.02 occurrences of it, i.e., just over ten. Instead, there are 65—around six and a half times as many as predicted. So far, this just confirms the discrepancy I cited above: ny>k has a probability of 38.24%, about six and a half times the generic probability of *y>k. But I can now also select all transitions of the form n*>k, tally the actual counts and the predicted counts, and compare the sums to see if there’s any significant aggregate difference for the whole set. In doing this, it’s important to include counts of zero for any attested transitions **>k that never appear as n*>k, as well as the non-zero predictions for those same transitions. With that measure in place, I’ve confirmed that the sums of the actual occurrences equal the sums of the predictions, so my algorithm at least seems to be doing what I meant for it to do (which I try never to take for granted).

For n*>k, the sum of all actual occurrences is 500, and the sum of all predictions (rounded to the nearest integer) is 425. Thus, n appears on average to boost the probability of a transition from the glyph that follows immediately after it to k to ~118% of its usual level. However, the magnitude of the boost varies a lot from case to case, and in one instance, no>k, there’s actually a slight decrease.

- nch>k: 31 actual, 13.77 predicted (~225%)

- nSh>k: 13 actual, 6.72 predicted (~193%)

- no>k: 364 actual, 383.10 predicted (~95%)

- ny>k: 65 actual, 10.02 predicted (~649%)

- nl>k: 19 actual, 7.58 predicted (~251%)

One complication may be that the figure for no>k—for example—is included in the figure for *o>k on which the prediction for no>k is based in turn, so that the influence from n could already be contaminating our point of reference. To avoid that, we could always predict a token quantity for no>k based on a probability calculated for all *o>k except no>k. But I’ve tried that “fix,” and the results aren’t much different. And maybe I shouldn’t have expected them to be. After all, a further problem it doesn’t address is that other glyphs, such as y, seem to exert a similar influence to that of n, so that even if we remove all n*>k from our calculation of probabilities for **>k, transitions from bigrams beginning with those other glyphs, such as y*>k, remain and could skew overall results:

- ych>k: 28 actual, 17.4 predicted (~161%)

- ySh>k: 17 actual, 7.97 predicted (~213%)

- yo>k: 632 actual, 595.18 predicted (~106%)

- yy>k: 104 actual, 13.74 predicted (~757%)

- yl>k: 254 actual, 160.25 predicted (~159%)

Meanwhile, other glyphs inserted into *y>k, *ch>k, *o>k, and so forth might tug the overall results in the opposite direction, with each case having an influence proportional to the commonness of its bigram. I’m sure that part of the reason why yy>k and ny>k exceed their predicted counts so dramatically is that an even more common combination brings down the average probability for *y>k with respect to which the predictions were made.

- dy>k: 150 actual, 307.88 predicted (~49%)

By contrast, the equivalent transition for *o>k is relatively uncommon, which helps account for the fact that yo>k and no>k come so much closer to the predictions based on overall averages in that case, while the other transition deviates even more from its prediction.

- do>k: 22 actual, 84.59 predicted (~26%)

Thus, many disparate factors are likely to be playing into each of these figures. I’m not sure there’s any “pure” point of reference for a transition such as *o>k that’s truly neutral with regard to preceding glyphs; and without one, the method I’ve described is bound to be at least somewhat inaccurate even if the hypothesis in which it’s rooted is correct. As it stands, some of the individual results for a set such as y*>k or n*>k can look inconsistent enough to raise doubts about whether there’s any real pattern present at all. But the method I’ve described does still provide a kind of quantitative confirmation for the correlations I mentioned earlier—

- y*>k: 1053 actual, 809 predicted (~130%)

- y*>t: 764 actual, 377 predicted (~203%)

- n*>k: 500 actual, 425 predicted (~118%)

- n*>t; 423 actual, 231 predicted (~183%)

- e*>y: 3238 actual, 2472 predicted (~131%)

- ee*>y: 1258 actual, 918 predicted (~137%)

—as well as evidence for numerous others besides. Here’s a list of further general cases with actual-to-predicted ratios less than 3:5 or greater than 5:3 and actual counts exceeding 100, with all manifestations of each having an actual count or prediction of ten or higher given afterwards together with their individual ratios (predictions are rounded to nearest integer, which will occasionally give the illusion of a tie when values are only very close).

- n*>cKh: 129 actual, 54.75 predicted (~236%): nch>cKh (85:32), nSh>cKh (34:15)

- e*>d: 513 actual, 245.98 predicted (~209%): eo>d (380:105), ech>d (18:11); exception: ey>d (85:101)

- q*>k: 2610 actual, 1275.42 predicted (~205%): qo>k (2591:1273); qe>k (15:1)

- y*>p: 148 actual, 73.15 predicted (~202%): yo>p (108:57), yy>p (21:3)

- k*>ch: 166 actual, 88.67 predicted (~192%): ky>ch (66:43), ke>ch (65:17), kee>ch (23:15), kl>ch (10:6)

- e*>s: 109 actual, 57.06 predicted (~191%): eo>s (66:18), ey>s (35:30)

- cKh*>y: 122 actual, 66.26 predicted (~184%): cKhe>y (72:26), cKhd>y (24:20), cKhh>y (13:10)

- n*>t: 423 actual, 231.14 predicted (~183%): no>t (355:212), ny>t (57:8)

- n*>a: 239 actual, 134.70 predicted (~177%): nd>a (125:42), nch>a (47:33), nSh>a (20:13), ns>a (14:12); exception: no>a (7:14)

- q*>l: 248 actual, 972.28 predicted (~26%): qo>l (247:970)

- y*>y: 263 actual, 857.61 predicted (~31%): yd>y (122:527), yt>y (27:44), yk>y (26:48), ych>y (25:74), ySh>y (14:26), ycTh>y (10:21), yl>y (10:39); exceptions: yr>y (8:16), ys>y (4:15)

- e*>k: 173 actual, 491.19 predicted (~35%): eo>k (70:393), ed>k (8:10); exception: ey>k (91:80)

- ee*>a: 176 actual, 425.26 predicted (~41%): eed>a (106:320), ees>a (26:47), eek>a (11:27), eet>a (5:10); exceptions: eeo>a (12:4), eey>a (12:11)

- d*>k: 191 actual, 421.53 predicted (~45%): dy>k (150:307), do>k (22:85), dl>k (13:15)

- a*>k: 201 actual, 423.07 predicted (~48%): al>k (158:368), ar>k (18:23), ai>k (13:21)

- y*>d: 214 actual, 441.69 predicted (~48%): ych>d (90:138), yl>d (36:58), yo>d (26:159); exception: ySh>d (37:35)

- d*>t: 150 actual, 296.24 predicted (~51%): dy>t (126:244), do>t (23:47)

- e*>a: 581 actual, 1060.66 predicted (~55%): ed>a (392:841), ek>a (63:88), et>a (27:34), es>a (23:40); exceptions: eo>a (32:14), ep>a (12:8), ey>a (11:13)

- l*>y: 336 actual, 565.77 predicted (~59%): ld>y (168:301), lk>y (69:89), lch>y (40:76), lSh>y (26:29), lt>y (4:13), ll>y (3:10)